Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model to recognize and generate new concepts, such as specific styles, characters, or objects, from a small set of example images. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

minimax / hailuo-02

Hailuo 2 is a text-to-video and image-to-video model that can make 6s or 10s videos at 768p (standard) or 1080p (pro). It excels at real-world physics.

minimax / hailuo-02-fast

A low-cost, fast version of Hailuo 02 that generates 6s and 10s videos at 512p.

bytedance / omni-human

Turns your audio/video/images into professional-quality animated videos

google / veo-3-fast

A faster and cheaper version of Google’s Veo 3 video model, with audio

google / veo-3

Sound on: Google’s flagship Veo 3 text-to-video model, with audio

flux-kontext-apps / kontext-emoji-maker

Use Kontext to turn any image into an emoji, using a LoRA by starsfriday

wan-video / wan-2.2-t2v-fast

A very fast and cheap PrunaAI-optimized version of Wan 2.2 A14B text-to-video

black-forest-labs / flux-krea-dev

An opinionated text-to-image model from Black Forest Labs in collaboration with Krea that excels in photorealism. Creates images that avoid the oversaturated "AI look".

wan-video / wan-2.2-i2v-a14b

Image-to-video at 720p and 480p with Wan 2.2 A14B

Official models

Official models are always on, maintained, and have predictable pricing.

I want to…

Generate images

Use AI to generate images and photos with an API

Caption videos

Use AI to caption videos with an API

Generate speech

Convert text to speech

Use a face to make images

Make realistic images of people instantly

Generate videos

Use AI to generate videos with an API

Upscale images

Upscaling models that create high-quality images from low-quality images

Generate music

Use AI to generate music with an API

Edit images

Use AI to edit any image with an API

Transcribe speech

Models that convert speech to text

Extract text from images

Optical character recognition (OCR) and text extraction

Remove backgrounds

Models that remove backgrounds from images and videos

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Enhance videos

Upscaling models that create high-quality videos from low-quality videos

Edit videos

Tools for editing videos.

Videos from images

Use AI to generate videos from images with an API

Make videos with Wan

Generate videos with Wan, the fastest and highest-quality open-source video generation model.

Use Kontext fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Caption images

Use AI to caption images with an API

Chat with images

Ask language models about images

Use LLMs

Models that can understand and generate text

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Use handy tools

Toolbelt-type models for videos and images.

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Sing with voices

Voice-to-voice cloning and musical prosody

Get embeddings

Models that generate embeddings from inputs

Try for free

Get started with these models without adding a credit card. Whether you're making videos, generating images, or upscaling photos, these are great starting points.

Use official models

Official models are always on, maintained, and have predictable pricing.

Detect objects

Models that detect or segment objects in images and videos.

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Popular models

This is the fastest Flux Dev endpoint in the world. Contact us at pruna.ai for more.

whisper-large-v3, incredibly fast, with video transcription

Return CLIP features for the clip-vit-large-patch14 model

Practical face restoration algorithm for *old photos* or *AI-generated faces*

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

Latest models

Hailuo 2 is a text-to-video and image-to-video model that can make 6s or 10s videos at 768p (standard) or 1080p (pro). It excels at real-world physics.

A low-cost, fast version of Hailuo 02 that generates 6s and 10s videos at 512p.

Turns your audio/video/images into professional-quality animated videos

A faster and cheaper version of Google’s Veo 3 video model, with audio

Use Kontext to turn any image into an emoji, using a LoRA by starsfriday

A very fast and cheap PrunaAI-optimized version of Wan 2.2 A14B text-to-video

A very fast and cheap PrunaAI-optimized version of Wan 2.2 A14B image-to-video

PartCrafter is a structured 3D mesh generation model that creates multiple parts and objects from a single RGB image.

The image generation model tailored for local development and personal use

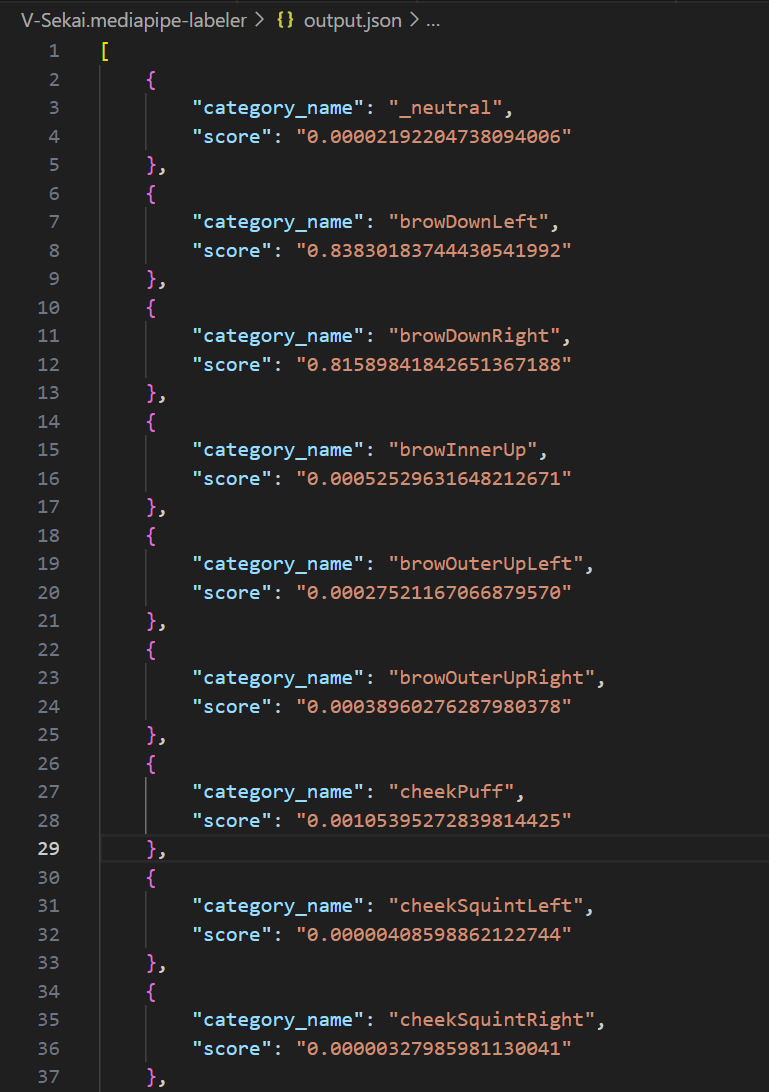

Mediapipe Blendshape Labeler - Predicts the blend shapes of an image.

wan-video/wan-2.2 (all variants) + topazlabs/video-upscale + zsxkib/smart-thinksound

Text-guided image editing model that preserves original details while making targeted modifications like lighting changes, object removal, and style conversion

Official CLIP models: generate CLIP (clip-vit-large-patch14) text and image embeddings

Granite-speech-3.3-8b is a compact and efficient speech-language model, specifically designed for automatic speech recognition (ASR) and automatic speech translation (AST).

Automatically generates expert ThinkSound prompts by analyzing your video with Claude 4, so you no longer have to struggle with complex audio descriptions

An opinionated text-to-image model from Black Forest Labs in collaboration with Krea that excels in photorealism. Creates images that avoid the oversaturated "AI look".

Voxtral Small is an enhancement of Mistral Small 3 that incorporates state-of-the-art audio input capabilities and excels at speech transcription, translation and audio understanding.

Make a very realistic-looking AI video of the real world via FLUX 1.1 Pro and Wan 2.2 i2v

Seed-X-PPO-7B by ByteDance-Seed, part of a powerful series of open-source multilingual translation language models

Generate 6s videos with prompts or images (also known as Hailuo). Use a subject reference to make a video with a character and the S2V-01 model.

Granite-3.3-8B-Instruct is an 8-billion-parameter language model with a 128K context length, fine-tuned for improved reasoning and instruction-following capabilities.

Granite-vision-3.3-2b is a compact and efficient vision-language model, specifically designed for visual document understanding, enabling automated content extraction from tables, charts, infographics, plots, diagrams, and more.

Higgs Audio v2, a powerful text-to-speech audio foundation model that excels in expressive audio generation

Run any ComfyUI workflow. Guide: https://github.com/replicate/cog-comfyui

🎤The best open-source speech-to-text model as of Jul 2025, transcribing audio with record 5.63% WER and enabling AI tasks like summarization directly from speech✨

InScene is a LoRA by Peter O’Malley (POM) that's designed to generate images that maintain scene consistency with a source image. It is trained on top of Flux.1-Kontext.dev.

A video generation model that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 720p resolution

A pro version of Seedance that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 1080p resolution

Edit an image with a prompt. This is the hidream-e1.1 model accelerated with the pruna optimisation engine.

MOSS-TTSD (text to spoken dialogue) is an open-source bilingual spoken dialogue synthesis model that supports both Chinese and English. It can transform dialogue scripts between two speakers into natural, expressive conversational speech.

Generate an image using the previously generated image as the input with a recursive prompt.

Accelerated variant of Photon prioritizing speed while maintaining quality